Today, we’re announcing a significant change to how Effective Experiments handles experiment outcomes. We’re moving away from the traditional “successful,” “unsuccessful,” and “inconclusive” terminology in favour of more precise language: “Hypothesis Validated,” “Hypothesis Not Validated,” and “No Change.”

This isn’t just a cosmetic update—it’s a fundamental shift toward more rigorous, learning-focused experimentation that challenges one of the most damaging narratives in our industry.

The “Success” Problem: How Experimentation Lost Its Way

Over the past decade, experimentation has been increasingly sold as a magic bullet for growth. CRO agencies and optimization teams learned to speak the language that stakeholders wanted to hear: quick wins, guaranteed improvements, and an endless stream of “successful” tests.

The promise was seductive: implement our testing program, achieve a high “win rate,” and watch your metrics soar. Success became the currency of credibility, and velocity became the measure of competence.

But this success-obsessed narrative has created some deeply problematic habits:

The Win-at-All-Costs Mentality

When teams are judged primarily on their success rate, the incentive structure becomes clear: find ways to make tests “successful” rather than generate reliable insights. This encourages HARKing (Hypothesizing After the Results are Known) and related questionable practices, which show up as:

- Cherry-picking metrics until something shows statistical significance

- Stopping tests early when they’re trending positive

- Post-hoc rationalization of why unexpected results are actually “wins”

- Avoiding risky hypotheses that might challenge assumptions

- Redefining success criteria after seeing results

The Velocity Trap

The obsession with running more tests faster has created a culture where quantity trumps quality. Teams celebrate shipping 50 tests per quarter while struggling to explain how those tests influenced any meaningful business decisions.

This velocity-first approach often results in:

- Tests without clear hypotheses

- Insufficient sample sizes and statistical power

- Minimal follow-up on implementation

- No systematic learning or knowledge building

The Magic Beans Promise

Perhaps most damaging of all, experimentation was oversold to stakeholders as a guaranteed growth engine. “Implement our CRO program and achieve a 30% conversion lift!” became the standard pitch.

This created unrealistic expectations and set up a dynamic where practitioners feel pressured to deliver “successful” results rather than honest insights about what works, what doesn’t, and what requires further investigation.

Why Language Matters: The Science of Hypothesis Testing

The terminology we use shapes how we think about the work. When we label experiments as “successful” or “unsuccessful,” we’re implicitly making a value judgment about the quality of the work based on the outcome.

But here’s the fundamental truth: a well-designed experiment that disproves your hypothesis is just as valuable as one that confirms it.

In the scientific method, hypothesis testing isn’t about winning or losing; it’s about learning. When we test a hypothesis, there are three possible outcomes:

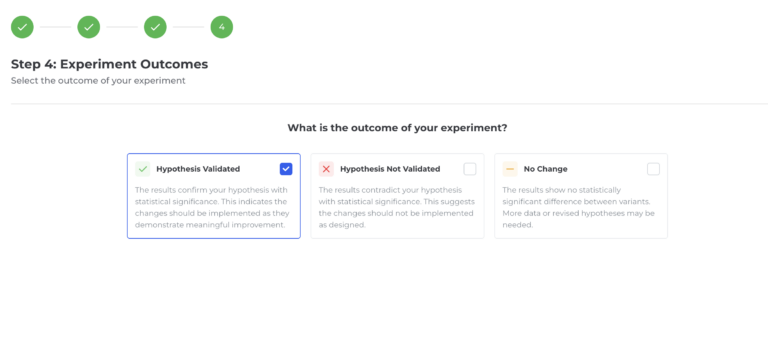

- Hypothesis Validated: The results support our hypothesis with statistical confidence

- Hypothesis Not Validated: The results contradict our hypothesis with statistical confidence

- No Change: The results show no statistically significant difference

Each outcome provides valuable information. A validated hypothesis gives us confidence to implement changes. An invalidated hypothesis saves us from implementing changes that don’t work. And “no change” results help us understand the boundaries of what matters to our users.
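To make the three definitions concrete, here is a minimal Python sketch of how a single result might be mapped onto them. It assumes a conversion-rate metric, a two-sided two-proportion z-test at a 5% significance level standing in for “statistical confidence,” and a hypothesis that states its predicted direction before the test runs. The function `classify_outcome` and its parameters are hypothetical illustrations, not part of the Effective Experiments platform, and real programs may use other methods (for example, sequential or Bayesian analysis).

```python
import math


def classify_outcome(conv_a, n_a, conv_b, n_b, predicted_direction=1, alpha=0.05):
    """Map an A/B result onto the three outcome labels (hypothetical sketch).

    conv_a, n_a: conversions and visitors in the control (A)
    conv_b, n_b: conversions and visitors in the variant (B)
    predicted_direction: +1 if the hypothesis predicts B beats A, -1 if it predicts B loses
    alpha: significance threshold standing in for "statistical confidence" (assumption)
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Nobody converted, or everybody converted, in both arms: no difference to detect.
        return "No Change", 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    if p_value >= alpha:
        return "No Change", p_value
    # Significant result: the sign of z says whether it matches the prediction.
    if z * predicted_direction > 0:
        return "Hypothesis Validated", p_value
    return "Hypothesis Not Validated", p_value


if __name__ == "__main__":
    # Hypothesis (stated up front): the variant raises conversion from a 5% baseline.
    label, p = classify_outcome(500, 10_000, 600, 10_000, predicted_direction=1)
    print(label)  # → Hypothesis Validated
```

The test is deliberately two-sided: a one-sided test in the predicted direction would fold a significant result in the opposite direction into “No Change,” hiding exactly the “Hypothesis Not Validated” evidence this terminology is meant to surface. Fixing `predicted_direction` before looking at the data also guards against redefining success criteria after the results are in.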

What Changes in Effective Experiments

Starting today, you’ll see this new terminology reflected throughout the platform.

This change reinforces our core belief that experimentation governance should focus on the quality of insights generated, not the direction of results achieved.

The Bigger Picture: Toward Mature Experimentation

This terminology shift is part of a larger movement toward experimentation maturity. Organizations that have built truly strategic testing programs understand that:

- Learning velocity matters more than test velocity

- Methodological rigor creates more value than optimistic results

- Strategic alignment trumps tactical optimization

- Long-term insight accumulation beats short-term metric improvements

The companies seeing the greatest impact from experimentation are those that have moved beyond the “quick wins” mentality toward systematic, hypothesis-driven learning programs.

What This Means for Your Team

If you’re currently using Effective Experiments, you don’t need to take any action—the platform will automatically use the new terminology going forward. But we encourage you to consider how this shift might influence your team’s approach:

For Practitioners:

- Focus experiment proposals on the quality of the hypothesis rather than the likelihood of “success”

- Celebrate rigorous methodology regardless of the direction of the results

- Build learning narratives that connect insights across multiple experiments

For Managers:

- Evaluate team performance based on insight quality and strategic impact rather than win rates

- Create psychological safety for testing hypotheses that might not validate

- Emphasize long-term knowledge building over short-term metric optimization

For Executives:

- Recognize that mature experimentation programs generate value through learning, not just through positive results

- Invest in governance and methodology rather than just test volume

- Align experimentation with strategic decision-making rather than tactical optimization

A Challenge to the Industry

We hope this change sparks broader reflection in the experimentation community. It’s time to move beyond the simplistic success/failure framing that has limited our industry’s potential.

True experimentation maturity means embracing uncertainty, valuing rigorous methodology, and building systematic learning capabilities that compound over time. It means having the intellectual honesty to say “our hypothesis was not validated” instead of hunting for ways to declare victory.

The organizations that make this shift—from success-obsessed to learning-focused—will be the ones that realize experimentation’s true strategic potential.